|

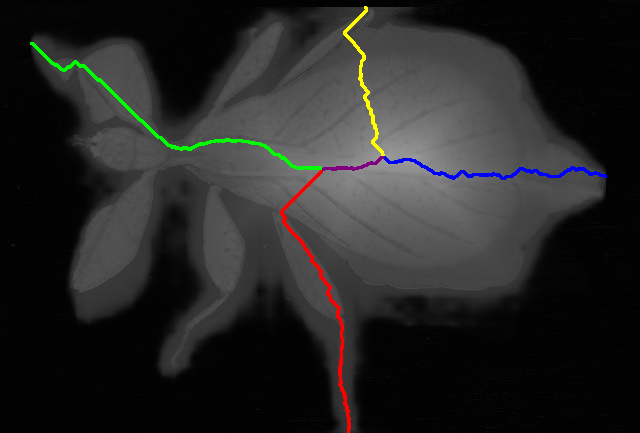

Débora E. C. Oliveira, Caio L. Demario, Paulo A. V. Miranda. Image Segmentation by Relaxed Deep Extreme Cut with Connected Extreme Points. IAPR International Conference on Discrete Geometry and Mathematical Morphology (DGMM), May 2021, Sweden. Accepted, to appear.

Abstract

In this work, we propose a hybrid method for image segmentation based on the selection of four extreme points (the leftmost, rightmost, top and bottom pixels on the object boundary). The method combines Deep Extreme Cut, a connectivity constraint for the extreme points, a marker-based color classifier built from automatically estimated markers, and a final relaxation procedure with the boundary polarity constraint, which is related to the extension of Random Walks to directed graphs proposed by Singaraju et al. The second of these components presents theoretical contributions on how to optimally convert the four-point boundary-based selection into connected region-based markers for image segmentation. The proposed method is able to correct imperfections from Deep Extreme Cut, leading to considerably improved results on public datasets of natural images with minimal user intervention (only four mouse clicks).

The ground truth databases of public images and the source code used to evaluate our method are available. We used four datasets of natural images:

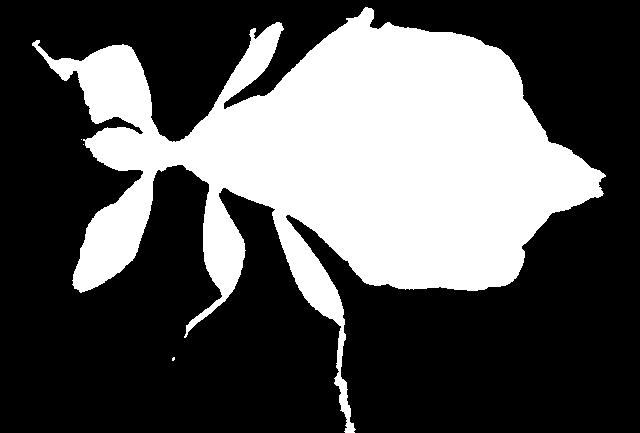
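To illustrate the four extreme points described in the abstract, the following is a minimal sketch of how they could be extracted from a binary ground-truth mask. It is not taken from the authors' source code: the `Point` and `ExtremePoints` types, the `extreme_points` function, the row-major mask layout (non-zero meaning object), and the tie-breaking rule (first pixel in raster order) are all assumptions made for this example.

```c
/* Hypothetical types for this sketch; not part of the authors' code. */
typedef struct { int x, y; } Point;

typedef struct {
    Point left, right, top, bottom;
    int found; /* 0 if the mask contains no object pixel */
} ExtremePoints;

/*
 * Scan a row-major binary mask (non-zero = object) and return the
 * leftmost, rightmost, topmost and bottommost object pixels.
 * Assumption: ties are broken by keeping the first pixel met in
 * raster order (top to bottom, left to right).
 */
ExtremePoints extreme_points(const unsigned char *mask, int width, int height)
{
    ExtremePoints e = { {0, 0}, {0, 0}, {0, 0}, {0, 0}, 0 };

    for (int y = 0; y < height; y++) {
        for (int x = 0; x < width; x++) {
            if (!mask[y * width + x])
                continue; /* background pixel */

            Point p = { x, y };
            if (!e.found) {
                /* First object pixel initializes all four extremes. */
                e.left = e.right = e.top = e.bottom = p;
                e.found = 1;
                continue;
            }
            if (x < e.left.x)   e.left = p;
            if (x > e.right.x)  e.right = p;
            if (y < e.top.y)    e.top = p;
            if (y > e.bottom.y) e.bottom = p;
        }
    }
    return e;
}
```

Each returned pixel necessarily lies on the object boundary, since no object pixel exists further out in its direction. A caller would check `found` before using the points, because an empty mask yields no extreme points.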

The pre-computed Deep Extreme Cut [1] results and the extreme points used in our experiments, computed automatically from the ground truth images, are available here for all datasets. Our source code is available here. The code is implemented in C/C++, was compiled with gcc 9.3.0, and was tested on Linux (Ubuntu 20.04.1 LTS 64-bit) on a machine with an Intel® Core™ i5-10210U CPU @ 1.60GHz × 8. The ImageMagick command-line tools are required to convert images between formats. Below are some sample images of the NatImg21 dataset:

|